The Gap Between Apple’s Privacy Promises and Practices

If you’ve watched any Apple marketing over the past decade, you’ve undoubtedly seen their carefully crafted image as the tech company that actually cares about your privacy. “What happens on your iPhone, stays on your iPhone,” they proudly proclaim. “Privacy. That’s iPhone,” their billboards declare in bold, confident typography.

The messaging is clear: while Google, Facebook, and other tech giants are harvesting your data, Apple positions itself as the noble guardian of your digital life—a sanctuary where your personal information remains untouched and sacred.

It’s a compelling narrative. So compelling, in fact, that millions of consumers pay premium prices for Apple products partly because of this perceived privacy advantage. This perception has become so ingrained in our collective consciousness that many users genuinely believe their iPhones, MacBooks, and other Apple devices are fundamentally more private and secure than alternatives.

But this entire narrative is built on marketing sleight of hand rather than technical reality.

The EU Compliance Controversy: A Crack in the Facade

The recent controversy surrounding Apple’s compliance with the EU’s Digital Markets Act (DMA) has opened yet another window into the disconnect between Apple’s privacy rhetoric and its actual business practices. While Apple initially resisted EU demands to open its ecosystem, for example to alternative app marketplaces, citing privacy and security concerns, its eventual compliance raises serious questions about its true commitment to user privacy when faced with regulatory pressure.

A related example, this time from outside the EU, is the ongoing legal dispute between Apple and the UK government over the Investigatory Powers Act (IPA). The UK government has reportedly ordered Apple to create a backdoor into its encrypted iCloud services, ostensibly to assist in national security and law enforcement efforts. In response, Apple has argued that complying with this request would compromise its commitment to user privacy and security.

While Apple has not yet complied with the demand to create a backdoor, it has taken a significant step that some see as partial compliance: the company withdrew its Advanced Data Protection (ADP) feature from the UK market. This feature, which extends end-to-end encryption to iCloud backups, photos, notes, and other data categories, was a key privacy measure.

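By removing ADP, Apple left UK users’ iCloud backups, photos, and notes under standard data protection, in which Apple holds the encryption keys, giving the UK government greater access to that data through legal requests and demonstrating the tension between Apple’s public privacy promises and the legal pressures it faces.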

This situation highlights a growing pattern where Apple’s actions don’t fully align with its rhetoric about privacy. While it advertises its systems as encrypted and secure, the company has shown willingness to adapt its privacy features when faced with external legal demands. The withdrawal of ADP from the UK market, though framed as a temporary measure, exposes a vulnerability in Apple’s privacy defenses that users may not have anticipated.

Furthermore, the company’s refusal to fully comply with the backdoor request underscores the ongoing conflict between maintaining user privacy and complying with government surveillance orders. Apple’s approach seems to be a balancing act between protecting user data and ensuring continued access to key markets, leaving users to question whether privacy is truly as protected as the marketing suggests.

This disconnect is also evident in Apple’s handling of data security vulnerabilities. Reports of flaws in Apple’s M1, M2, and M3 chips, such as the 2024 “GoFetch” research, have raised concerns that even the most secure devices can be compromised. These vulnerabilities allow malicious software running on an affected machine to extract sensitive data, including cryptographic keys, undermining Apple’s claims of robust privacy protection.

Despite these revelations, Apple has been slow to address the issues, further highlighting the gap between the company’s promises and its ability to safeguard user information. Combined with the company’s legal maneuvering in response to privacy demands from governments like the UK, these actions paint a picture of a company that, while positioning itself as a privacy leader, may be more willing to compromise on those principles when legal or business interests are at stake.

This pattern of resistance followed by quiet compliance isn’t new. It represents a broader strategy: maintain the public image of privacy advocacy while making the necessary concessions behind closed doors when business interests demand it.

The Surveillance Expands: From Monitoring Your Devices to Monitoring Your Body

If you thought Apple’s data collection was limited to your digital activities, think again. The company’s ambitions extend directly to monitoring your physical body and even your brainwaves.

The Apple Watch exemplifies this bodily surveillance, operating as a sophisticated biometric data collection device disguised as a convenient wearable. Most users remain unaware of just how extensive this monitoring is. The Apple Watch tracks heart rate, blood oxygen levels, sleep patterns, menstrual cycles, and physical movements with clinical precision. This isn’t just basic step counting – it’s continuous physiological monitoring that creates a comprehensive profile of your body’s patterns and responses.

What Apple doesn’t prominently advertise is how this treasure trove of intimate health data gets monetized. While Apple promotes privacy features on the surface, the company’s terms allow them to share “de-identified” health data with partners – a distinction that provides little practical protection as research has repeatedly shown that de-identified data can be easily re-identified when combined with other data sets. Additionally, Apple has partnered with health insurers, for example on Aetna’s Attain app, which rewards members for Apple Watch activity, creating concerning scenarios where your most personal bodily metrics could influence insurance premiums or coverage decisions.
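To see why stripping names offers limited protection, here is a minimal sketch of a so-called linkage attack. Everything in it is hypothetical: the records, the names, the field names, and the `reidentify` helper are invented for illustration and do not describe Apple’s actual data formats or any partner’s practices. It only shows the general mechanism, in which quasi-identifiers such as ZIP code, birth year, and sex are joined against a separate dataset that still carries names.

```python
# Hypothetical toy data, invented for illustration only.
# "De-identified" health records: names removed, quasi-identifiers kept.
health_records = [
    {"zip": "98101", "birth_year": 1980, "sex": "F", "resting_hr": 58},
    {"zip": "98101", "birth_year": 1992, "sex": "M", "resting_hr": 71},
    {"zip": "33101", "birth_year": 1980, "sex": "F", "resting_hr": 64},
]

# A separate, named dataset (think of a public roster or marketing list).
public_roster = [
    {"name": "Alice", "zip": "98101", "birth_year": 1980, "sex": "F"},
    {"name": "Bob", "zip": "98101", "birth_year": 1992, "sex": "M"},
]

# Fields present in both datasets that, combined, narrow down a person.
QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")


def reidentify(deidentified, roster, keys=QUASI_IDENTIFIERS):
    """Return {name: health_record} for every record that matches exactly one named person."""
    # Index the named dataset by its quasi-identifier combination.
    index = {}
    for person in roster:
        combo = tuple(person[k] for k in keys)
        index.setdefault(combo, []).append(person["name"])

    matches = {}
    for record in deidentified:
        names = index.get(tuple(record[k] for k in keys), [])
        if len(names) == 1:  # a unique match means the record is re-identified
            matches[names[0]] = record
    return matches


for name, record in reidentify(health_records, public_roster).items():
    print(name, record["resting_hr"])
```

Run as written, this prints Alice’s and Bob’s resting heart rates, even though neither name appeared in the health data. The third record stays anonymous only because nobody in the roster shares its ZIP code; a larger roster would likely close that gap too.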

Most concerning is how this biometric data collection has been normalized through gamification and “wellness” marketing. The Apple Watch has transformed continuous corporate surveillance of your body into something users not only accept but actively desire and pay handsomely for. Many users wear their Apple Watch 24/7, giving the company unprecedented access to monitor sleep patterns, stress responses, and even detect subtle changes in physical condition – all while maintaining absolute opacity about how this data might be used beyond the immediate fitness applications.

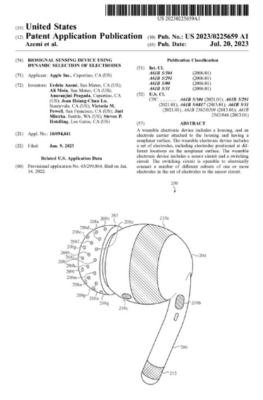

A 2023 Apple patent application titled “BIOSIGNAL SENSING DEVICE USING DYNAMIC SELECTION OF ELECTRODES” (US20230225659) reveals plans for AirPods equipped with electrodes capable of measuring biosignals, including electroencephalography (EEG), essentially your brain activity. The application describes technology that can actively select and adjust electrodes to optimize the reception of your neural signals, monitoring your cognitive states and brainwave patterns while the device sits in your ears.

Let’s be absolutely clear about how egregious this is: Apple is developing technology to read your brain activity through devices you casually wear throughout your day. The same company that routes your messages through its servers, maintains backdoors in its operating systems, and complies with government demands for user data now wants direct access to your neurological signals.

While Apple will undoubtedly market this as a health and wellness feature, the privacy implications are staggering. Your neurological responses reveal information about your emotional states, attention levels, and potentially even your reactions to content you’re consuming. This is data that reveals far more about you than even your explicit communications – it’s a window into your unconscious responses and cognitive processing.

Imagine the targeting possibilities for advertisers who gain access to data about which content literally stimulates your brain most effectively. Consider the surveillance potential for monitoring your neurological responses to political content. Think about the possibility of real-time manipulation by subtle adjustments to content based on your brain’s responses.

This patent represents the ultimate invasion – moving beyond monitoring your online behavior to monitoring the internal workings of your mind itself. And it comes from a company with a proven track record of compromising user privacy when it’s financially or politically expedient to do so.

The Court Cases Apple Doesn’t Want You to Know About

Marketing claims are one thing; legal proceedings are quite another. Over the past two years alone, a series of court cases has revealed troubling discrepancies between Apple’s privacy promises and its actual data practices.

For instance, the Cima v. Apple lawsuit accuses Apple of deceptive privacy policies, alleging that the company continues to collect data despite users opting out, directly undermining its privacy promises (Source). Similarly, the revelation about Apple’s collaboration with governments to share push notification data highlights the tension between public assurances and private compliance with legal demands (Source).

Apple’s iCloud storage, which holds sensitive user data, is also clearly vulnerable to government access under legal pressures. This contradiction highlights that, despite claims of being privacy-centric, Apple’s systems still allow substantial access by external entities, including law enforcement (Source). These gaps in privacy protection suggest that Apple’s marketing slogan of “privacy is a fundamental human right” does not align with the actual data practices behind its sleek, privacy-focused interface.

These legal proceedings paint a very different picture than Apple’s carefully crafted marketing narrative. They suggest a company that, while perhaps better than some competitors on certain privacy metrics, is far from the privacy champion they portray themselves to be.

“Hey Siri, Are You Always Listening?” The Evidence Says Yes

Perhaps one of the most disturbing revelations comes from user experiences that directly contradict Apple’s claims about how their devices handle audio monitoring.

Consider the experiences of three families I have personally worked with who independently conducted a simple experiment with their Apple devices. One family from Washington State powered down all their iPhones and MacBooks completely before repeatedly discussing their interest in “buying a new home in Tennessee” throughout the day. Despite confirming their devices were fully turned off, when they powered everything back on the following morning, their social media feeds and news sites were flooded with real estate listings in Nashville and Knoxville, mortgage calculators, and Tennessee relocation guides, topics they had never previously searched for or discussed online.

Another family from Florida conducted a similar test with the word “crockpots,” mentioning slow cookers and specific recipes they wanted to try in a new crockpot while their powered-down Apple devices sat on the kitchen counter. Within hours of turning their devices back on, ads for various brands of slow cookers dominated their browsing experience. Meanwhile, yet another family I talked with late last year tried the same experiment, deliberately discussing “getting a new goldendoodle for the family” near their switched-off iPhones and iPads. The next day, their feeds were saturated with ads for goldendoodle breeders, puppy food, and dog training services, despite having no prior digital footprint related to pet ownership or that specific breed.

In each case, the families confirmed their devices were completely powered down, yet somehow the content of their private conversations materialized in their ad experiences when the devices were reactivated.

These aren’t isolated incidents. The pattern is clear and repeatable: conversations occurring while Apple devices are supposedly powered down somehow influence the advertisements that appear once those devices are powered back on.

The technical explanation for how this is possible is concerning. Either:

- Apple devices aren’t truly “off” when powered down and continue monitoring audio

- Apple is collecting and utilizing audio data from other nearby devices

- Apple is receiving this data through partnerships with other companies who are monitoring these conversations

None of these explanations align with Apple’s privacy promises.

The Technical Reality Behind Apple’s Privacy Claims

Beyond the marketing and legal battles lies the technical reality of how Apple’s ecosystem actually functions. While Apple has implemented some genuine privacy features—like App Tracking Transparency and Mail Privacy Protection—a deeper examination reveals that their overall approach to privacy is far more complex and compromised than they suggest.

iCloud Backups: The Privacy Backdoor

Most iPhone users back up their devices to iCloud, believing their data remains protected. What Apple doesn’t emphasize is that iCloud backups are not end-to-end encrypted by default. This means Apple maintains the ability to access most of your data and can provide it to law enforcement or governments when requested.

This isn’t speculation—Apple regularly complies with such requests, providing user data from iCloud backups that includes messages, photos, and other sensitive information. A company truly committed to privacy as its highest value would have implemented end-to-end encryption for all user data years ago.

Siri and Voice Recognition: Who’s Really Listening?

Apple’s voice assistant Siri presents another privacy contradiction. While Apple claims to prioritize on-device processing for privacy reasons, it has admitted that human contractors reviewed Siri recordings, which sometimes captured intimate moments without users’ knowledge. Only after public outcry in 2019 did Apple change the program, making human review of recordings opt-in, or so the company tells us.

The Black Box: Apple’s Closed-Source Approach to “Security”

Perhaps one of the most fundamental privacy issues with Apple products is their largely closed-source operating systems. Apple publishes some low-level components, such as its Darwin kernel, but unlike open-source alternatives where security researchers can freely examine code to verify security claims and identify vulnerabilities, most of Apple’s operating system code is a black box, requiring users to simply trust Apple’s assurances about what their devices are actually doing.

This closed approach creates the perfect environment for implementing backdoors and data collection mechanisms that users cannot detect or disable. Intelligence agencies and Apple’s partners could be granted access points that would remain invisible to the average user, or even to security researchers. Without transparency, verification is impossible.

The “security by obscurity” approach Apple employs might have made sense during their minority market position decades ago, but it’s fundamentally at odds with modern security best practices, which recognize that truly secure systems should be secure even when their code is publicly available for scrutiny.

The App Store Ecosystem: Privacy or Control and Censorship?

Apple often justifies its tight control over the App Store as necessary for user privacy and security. However, this same control allows Apple to collect a percentage of all transactions and maintain monopolistic practices. The question becomes: is Apple’s “walled garden” truly about protecting users, or about protecting Apple’s business model and controlling what information you can access?

The reality is Apple’s App Store policies go far beyond security concerns and routinely censor applications based on political viewpoints and narratives that challenge mainstream positions.

Consider these examples:

Apple’s actions regarding the App Store have raised concerns about the company’s commitment to free speech, with accusations of political bias and selective enforcement of content guidelines. The tech giant has faced significant controversy for removing apps associated with certain political ideologies, particularly those seen as right-leaning or controversial. One notable example is Parler, a conservative social media platform, which was removed in January 2021 after the U.S. Capitol incident. Critics argued that Apple was targeting a platform with right-wing ideologies, even though other platforms with similar content remained accessible. Parler was eventually reinstated after adjusting its content moderation practices, but the incident sparked debates about Apple’s role in controlling political discourse.

Similarly, Telegram, an encrypted messaging app, has been threatened with removal multiple times over content concerns and was briefly pulled from the App Store in 2018. While Telegram addressed the issues and was reinstated, the swift action raised concerns that Apple was overly eager to suppress platforms that allowed for less stringent content moderation. Apple’s actions have also extended to apps tied to far-right ideologies and extremist content, effectively determining which political perspectives can be easily accessed through its ecosystem.

One of the most contentious issues Apple faced was its removal of apps used by pro-democracy protesters in Hong Kong during the 2019 protests, most prominently HKmap.live, a crowdsourced map of protest activity. These apps were critical for organizing protests and tracking police movements. Apple justified the removals by citing local laws, but critics argued that the company prioritized its business interests over its users’ right to free expression, especially as it faced pressure from the Chinese government.

This pattern extends to COVID-19 information as well. Multiple applications that questioned official narratives or provided alternative data analysis were systematically removed from the App Store under the nebulous banner of “misinformation,” even when these apps were presenting factual data from credible sources that simply contradicted the preferred narrative.

When one company controls what software you can install on your device, they’re not just protecting you from malware—they’re determining what information you’re allowed to access and which viewpoints you’re permitted to explore.

This level of thought control should concern anyone who values intellectual freedom, regardless of their political positions.

Following the Money: Apple’s True Privacy Incentives

As my father taught me years ago about understanding market behavior: “Follow the money. Learn who the majority shareholders and creditors are and, once identified, learn their ethos and what circles these individuals run in.”

As of December 30, 2024, the largest shareholders of Apple Inc. are:

- The Vanguard Group: Holds approximately 1.4 billion shares, representing 9.29% of total shares outstanding.

- BlackRock: Owns about 1.12 billion shares, accounting for 7.48% of shares outstanding.

- State Street Corporation: Possesses roughly 595.5 million shares, equating to 3.96% of shares outstanding.

- Fidelity Investments: Controls approximately 341.64 million shares, or 2.27% of shares outstanding.

- Geode Capital Management: Manages around 340.16 million shares, making up 2.26% of shares outstanding.

- Berkshire Hathaway: Holds about 300 million shares, representing 2% of shares outstanding.

- Morgan Stanley: Owns approximately 238.26 million shares, accounting for 1.59% of shares outstanding.

- T. Rowe Price: Possesses around 220.11 million shares, equating to 1.47% of shares outstanding.

- Norges Bank: Controls about 187.16 million shares, representing 1.25% of shares outstanding.

- JPMorgan Chase: Manages roughly 183.01 million shares, making up 1.22% of shares outstanding.
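Adding up the stakes listed above gives a rough sense of how concentrated ownership is. The sum below simply totals the percentages exactly as given, assuming they are all measured against the same share count on the same date:

$$
9.29 + 7.48 + 3.96 + 2.27 + 2.26 + 2.00 + 1.59 + 1.47 + 1.25 + 1.22 = 32.79\%
$$

In other words, these ten institutions together hold roughly a third of Apple’s outstanding shares.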

As of January 20, 2023, Apple’s Board of Directors comprises:

- Arthur D. Levinson: Chairman; former CEO of Genentech.

- Tim Cook: CEO of Apple.

- James A. Bell: Former CFO of Boeing.

- Alex Gorsky: Former CEO of Johnson & Johnson.

- Andrea Jung: Former CEO of Avon Products.

- Monica Lozano: Former CEO and Publisher of La Opinión.

- Ronald Sugar: Former CEO of Northrop Grumman.

- Susan Wagner: Co-founder and Director of BlackRock.

Anyone remotely paying attention to who the global power brokers are will quickly see what’s concerning about the information above. Not surprisingly, the top shareholders in Google, Facebook, and nearly every other major Big Tech company are nearly identical, as are those of virtually every major financial, pharmaceutical, insurance, and food company.

The Awakening: Recognizing Reality Over Marketing

Just as with other areas of our society, many of us have been lulled into a false sense of security by clever marketing and comforting narratives. The illusion that our digital lives are private when using Apple products is precisely that—an illusion. The evidence, when examined honestly, reveals a company making calculated business decisions like any other, not a privacy crusader.

This isn’t to say Apple is necessarily worse than competitors—in some ways, they may indeed offer marginally better privacy protections. But the gulf between their marketing claims and their actual practices represents a form of deception that consumers deserve to understand.

The “Apple Tax” on Your Privacy: Messages, Calls, and Shadow iCloud Accounts

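One of the most surprising aspects of Apple’s ecosystem for many users is the discovery that all iMessage communications are routed through Apple’s servers, and that standard SMS text messages can be synced or relayed through Apple’s servers as well when features such as Messages in iCloud or Text Message Forwarding are turned on. Although iMessage content is end-to-end encrypted, this isn’t just a technical implementation detail: Apple’s servers handle the metadata of those conversations, and if iCloud Backup is enabled without Advanced Data Protection, the backup includes a key that can decrypt your stored messages, putting your communications within Apple’s reach.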

Even more troubling is how Apple handles the transition away from its ecosystem. Many former iPhone users discover, much to their dismay, that texts from friends who use iPhones continue to be captured by iMessage, and never reach their new phone, long after they switch devices. This happens because Apple’s system doesn’t automatically release your phone number from iMessage, effectively holding your communications hostage to its ecosystem.

Additionally, many users are shocked to discover they have “shadow” iCloud accounts they never intentionally created. Apple’s aggressive push toward cloud services means many users are backing up sensitive data to Apple’s servers without explicit knowledge or consent. During device setup and software updates, options to enable iCloud services are often presented as recommended defaults, leading users to unwittingly share their data with Apple.

When attempting to leave the Apple ecosystem, these users suddenly discover just how deeply entangled their digital lives have become with Apple’s servers—and how difficult Apple makes it to fully escape their data collection mechanisms.

From Security Niche to Prime Target: Apple’s Changing Security Landscape

There was a time when Apple products genuinely offered some security benefits simply by virtue of being less popular targets for hackers. When Apple held a small market share, malicious actors focused their efforts on the more prevalent Windows systems.

That advantage has long since evaporated. With Apple devices now commanding over 60% market share among U.S. business professionals and technology workers, they’ve become prime targets for sophisticated attacks. Today’s hackers are well aware that high-value targets—executives, wealthy individuals, and technology professionals—disproportionately use Apple products, making these devices increasingly attractive for exploitation.

Despite this fundamental shift in the threat landscape, Apple continues to market their products as inherently more secure, relying on outdated perceptions rather than current reality.

Breaking Free: Steps Toward Actual Digital Privacy

If you’re concerned about genuine privacy protection, here are steps that go beyond simply trusting Apple’s marketing:

While Still Using Apple Products:

- Disable iCloud backups and use local encrypted backups instead.

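- Enable end-to-end encryption wherever available in Apple services, including Advanced Data Protection for iCloud (Settings > [your name] > iCloud > Advanced Data Protection) in regions where Apple still offers it.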

- Review all privacy settings on your Apple devices regularly, not just when setting up the device.

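- Turn off Text Message Forwarding (Settings > Messages > Text Message Forwarding) so your SMS messages are not relayed to other devices, reducing the places they can be intercepted.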

- Manually verify which data is being stored in iCloud and remove sensitive information.

- Use a Mic-Lock (a plug-in microphone blocker) to prevent the device from listening to your conversations all day and night.

Moving Beyond the Apple Ecosystem:

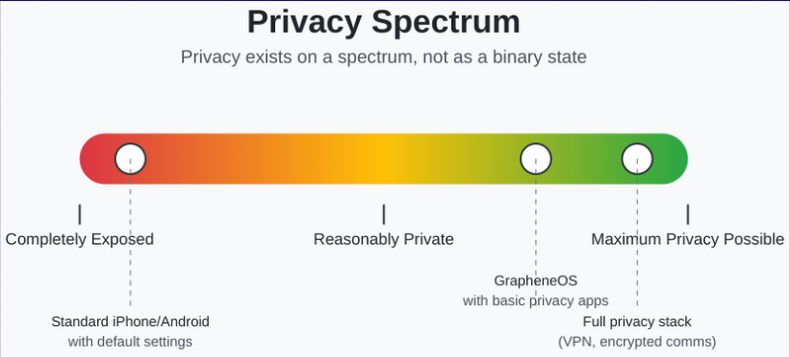

- Consider open-source alternatives like GrapheneOS for mobile devices and Linux distributions for laptops and desktops. These systems provide transparency through openly auditable code.

- Be prepared for an adjustment period when transitioning to open-source alternatives. While these platforms may not offer the same polished user experience initially, they are continuously improving and already provide viable alternatives for most common tasks.

- Adopt truly secure messaging platforms that don’t route through centralized servers and offer actual end-to-end encryption by default.

- When leaving the Apple ecosystem, turn off iMessage before switching, or use Apple’s online iMessage deregistration page if you no longer have the iPhone, so your phone number is disassociated from Apple’s messaging system and your texts are not hijacked.

- Pressure Apple for change by actively requesting stronger privacy protections and true end-to-end encryption for all services.

- Stay informed about actual privacy practices, not just marketing claims.

Conclusion: No More Excuses – The Time for Digital Sovereignty Is Now

Simply put, Apple’s privacy claims collapse under scrutiny.

The evidence from court cases, user experiences, and technical analysis reveals a company making business decisions first, with privacy considerations secondary.

This doesn’t mean we should abandon technology or live in fear. Rather, it means we must approach the digital world with open eyes, critical thinking, and a willingness to look beyond marketing narratives. Understanding the reality of how our data is handled—even by companies claiming to protect it—is the first step toward making truly informed choices about our digital lives.

The time for comfortable ignorance has passed. The time for excuses has ended. If you claim to value free speech, privacy, and security while continuing to use products designed to monitor, control, and censor you, you’re not just part of the problem—you’re funding it.

No more excuses.

No more “but it’s convenient.”

No more “but I’m already in their ecosystem.”

No more “but everyone else uses it.”

No more “but the alternatives aren’t as polished.”

The open-source movement represents an alternative path—one where technology serves its users rather than treating them as products. While transitioning to GrapheneOS on mobile or Linux on desktops may involve a learning curve and some initial inconvenience, the privacy and sovereignty benefits are substantial. These tools are rapidly evolving, becoming more user-friendly each year, while maintaining the fundamental transparency that closed systems like Apple’s inherently lack.

Would you rather experience a brief period of adjustment while learning new tools, or continue sacrificing your privacy and digital autonomy indefinitely?

The choice between convenience and privacy is real, but the gap is narrowing. By supporting open-source alternatives today, you not only protect your own privacy but help build a future where security and usability coexist.

In a world where data has become the most valuable commodity, we can no longer afford the luxury of comforting illusions. The time has come to demand actual privacy, not just privacy marketing—and to recognize that sometimes, true privacy requires us to step outside the walled gardens built by companies whose business models fundamentally depend on monitoring and controlling our digital lives.

Ask yourself: Do your actions align with your stated values? If you claim to champion freedom while voluntarily handing your digital life to companies that actively undermine that freedom, it’s time for a change.

Again, NO MORE EXCUSES.

We need more than social media complaints and dinner table discussions about privacy invasion. We need action.

We need users willing to vote with their wallets and their time. Every person who transitions to privacy-respecting alternatives increases the demand for better open-source tools, applications, and devices. Your individual choice creates market pressure that drives innovation in the privacy space.

“Be the change you wish to see in the world” isn’t just a feel-good slogan to post on social media—it’s a lifestyle that requires commitment and sometimes sacrifice. The revolution in digital privacy won’t come from hoping that Apple suddenly decides to prioritize your rights over their profits. It will come from individuals making conscious choices that align with their values, even when those choices aren’t the easiest path.

The parallel economy of truly privacy-respecting technology is growing. Will you be part of building it, or will you continue funding the very systems designed to monitor, control, and ultimately exploit you?

The choice, and the responsibility, is yours.

-Sean Patrick Tario

MARK37.COM